Invoke any Azure REST API from Azure Data Factory or Synapse pipelines

In this blog post, you will find out how to call any Azure REST API in order to complement your data integration needs.

Azure Data Factory and Azure Synapse have brilliant integration capabilities when it comes to working with data. You can have various relational or non-relational databases, file storage services, or even 3rd party apps registered as linked services. You can then create datasets on top of a linked service and gain access to its data.

There are also a number of compute services you can use from within your pipelines. For example, you can invoke an Azure Function, execute a notebook from Azure Databricks, or submit an Azure Batch job, among others. But sooner or later you will feel limited by the catalog of supported compute services. Have you wondered how to execute some action on an otherwise unsupported Azure resource in Azure Data Factory? You might be tempted to import an Azure SDK of choice and simply use it. But for better or worse, you can't have any programming code in a pipeline. Please stick to using activities! At first glance, there isn't such an activity...

Meet the Web activity

The Web activity is very simple yet powerful. There is no need to create any linked services or datasets in advance. This meets the need of executing an ad hoc request to some REST API. As you may know, each Azure service exposes a REST API.

Authentication and authorization

Before I show you a couple of examples, let's cover two important topics: authentication and authorization. To issue a request to an API endpoint, your pipeline will have to authenticate, in most cases as some type of security principal. The authentication types supported at the time of writing are:

- None (means no authentication, i.e. the API is public)

- Basic

- Managed Identity

- Client Certificate

- Service Principal

- User-assigned Managed Identity

In this post, I am going to focus on accessing Azure resources, and hence you have three relevant options: Service Principal, Managed Identity, or User-assigned Managed Identity. I recommend using managed identities whenever possible. The downside of a Service Principal is precisely the reason managed identities exist: you have to deal with its credentials yourself, i.e. generate, rotate, and secure them. It's a hassle.

When it comes to Managed Identity, no matter if you use Data Factory or Azure Synapse, they both have what's called a System-assigned Managed Identity. In other words, this is the identity of the resource itself. This means that the service knows how to handle the authentication behind the scenes.

The User-assigned Managed Identity is a standalone Azure resource. You create it on your own and then you can assign it to one or more services. That's the only difference from the system-assigned type, which is managed solely by the service itself.

Now that you have identified the security principal, you will have to create a certain role assignment in order for the operation that you are trying to execute to be properly authorized by the API. What role to assign will largely depend on what type of operation you want to execute. I encourage you not to use the built-in Contributor role; instead, follow the Principle of Least Privilege. Assign the minimal role that allows the action on the lowest possible scope, i.e. the Azure resource itself.

A few examples

Let's cover the groundwork with some examples. If you need to perform some other operation, have a look at the links near the end of the post.

There are two types of operations in Azure:

- Control-plane operations: these are implemented at the Resource Provider level and are accessed through the Azure Resource Manager URL, which for Azure Global is https://management.azure.com

- Data-plane operations: these are provided by your specific resource, i.e. the requests target the FQDN of the service in question, e.g. https://{yourStorageAccountName}.blob.core.windows.net or https://{yourKeyVault}.vault.azure.net
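The split between the two planes also determines which token audience a managed identity has to request. Here is a minimal Python sketch, meant for reasoning about the URLs outside of a pipeline, that classifies an endpoint by its host name. The function name and the audience values are assumptions for Azure Global; check them against the documentation for your cloud before relying on them.

```python
from typing import Tuple
from urllib.parse import urlparse

# Assumed token audiences for Azure Global (not stated in the post itself).
ARM_RESOURCE = "https://management.azure.com/"
STORAGE_RESOURCE = "https://storage.azure.com/"
KEY_VAULT_RESOURCE = "https://vault.azure.net"


def classify_endpoint(url: str) -> Tuple[str, str]:
    """Return (plane, token_audience) for a few well-known Azure Global hosts."""
    host = (urlparse(url).hostname or "").lower()
    if host == "management.azure.com":
        # Azure Resource Manager: control-plane operations.
        return "control-plane", ARM_RESOURCE
    if host.endswith(".blob.core.windows.net"):
        # Blob service FQDN of a specific storage account: data plane.
        return "data-plane", STORAGE_RESOURCE
    if host.endswith(".vault.azure.net"):
        # FQDN of a specific Key Vault: data plane.
        return "data-plane", KEY_VAULT_RESOURCE
    raise ValueError(f"Unrecognized host: {host!r}")
```

Only the three hosts mentioned in this post are covered; any other service would need its own entry.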

Example 1: Getting all Storage Account containers via the Management API

One of the options to retrieve the containers of a Storage Account is to use the List operation of the control-plane API, which corresponds to the following HTTP request:

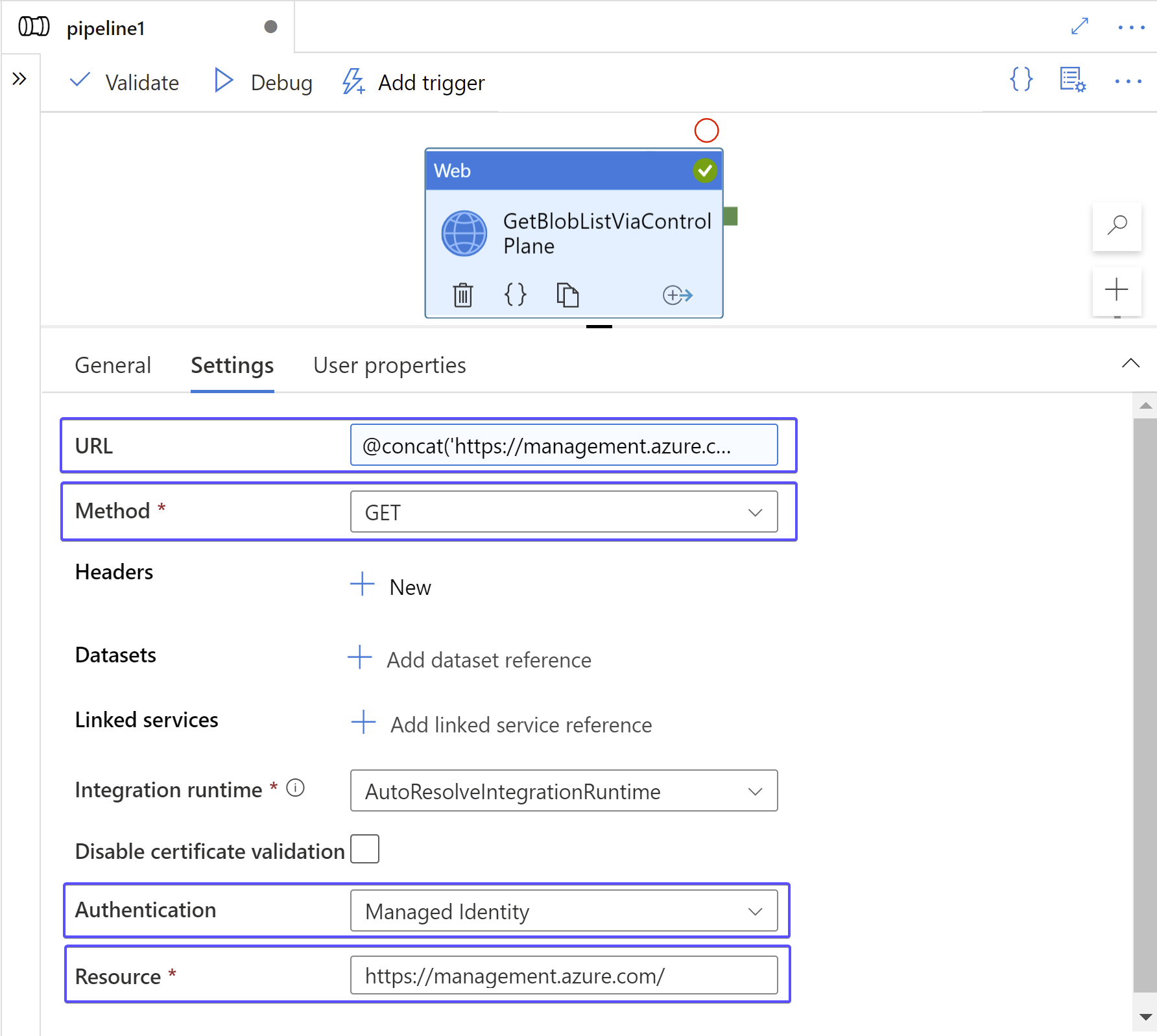

The settings of your Web activity should look like the following:

Instead of hardcoding the URL, I decided to parametrize it using pipeline variables: @concat('https://management.azure.com/subscriptions/', variables('subscriptionId'), '/resourceGroups/', variables('resourceGroupname'), '/providers/Microsoft.Storage/storageAccounts/', variables('storageAccountName'), '/blobServices/default/containers?api-version=2021-04-01').
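If you want to check the resulting URL outside of the pipeline, the same concatenation can be sketched in Python. The function name is hypothetical; the path segments and the api-version are copied from the expression above.

```python
def build_list_containers_url(
    subscription_id: str,
    resource_group_name: str,
    storage_account_name: str,
    api_version: str = "2021-04-01",
) -> str:
    """Mirror the @concat(...) expression used in the Web activity URL."""
    return (
        "https://management.azure.com/subscriptions/" + subscription_id
        + "/resourceGroups/" + resource_group_name
        + "/providers/Microsoft.Storage/storageAccounts/" + storage_account_name
        + "/blobServices/default/containers?api-version=" + api_version
    )


# Placeholder values, for illustration only.
print(build_list_containers_url("my-subscription-id", "my-rg", "mystorageaccount"))
```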

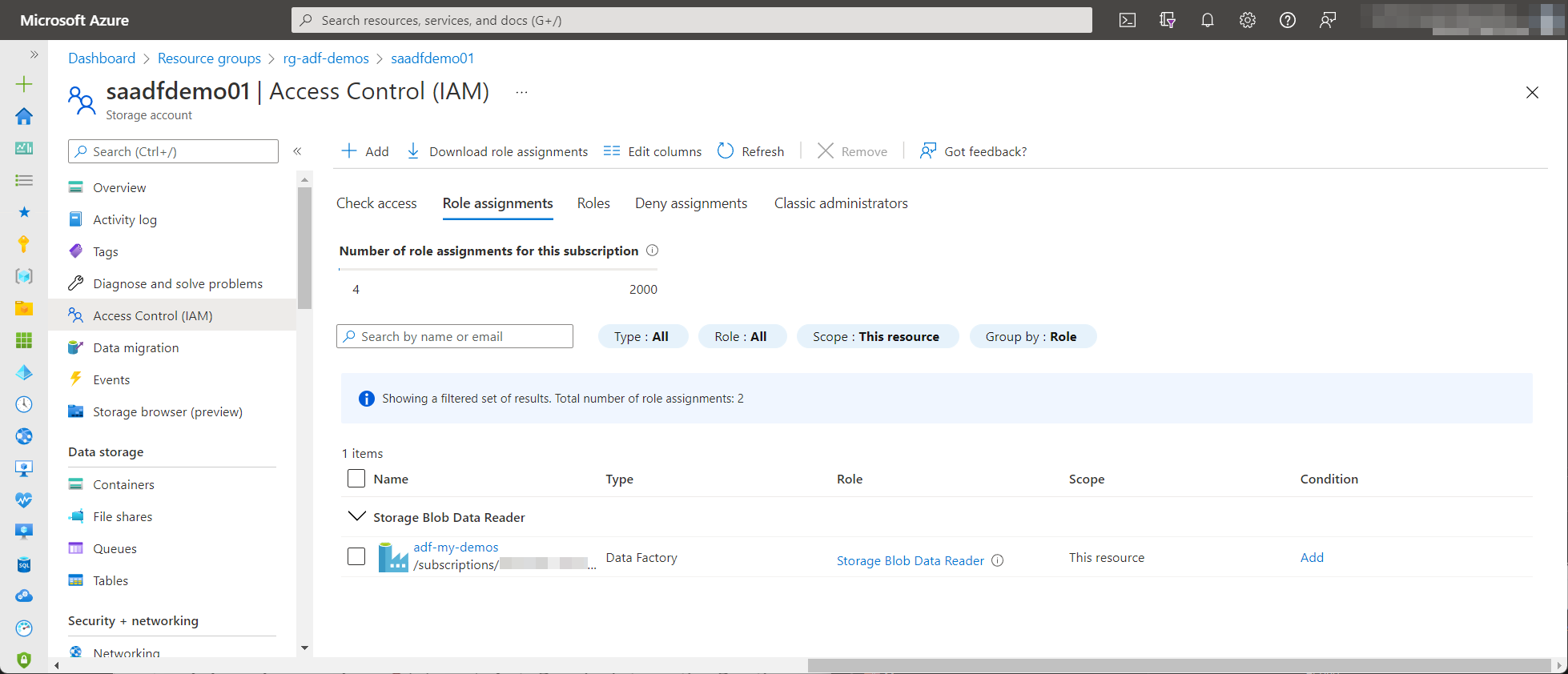

To make this query execute successfully, you will need to create a role assignment for the System-assigned Managed Identity of the Data Factory or Synapse workspace. The API operation requires the Microsoft.Storage/storageAccounts/blobServices/containers/read action, for which you can use the Storage Blob Data Reader built-in role. Besides the action needed, it grants only a small set of other permissions. The role assignment looks like this:

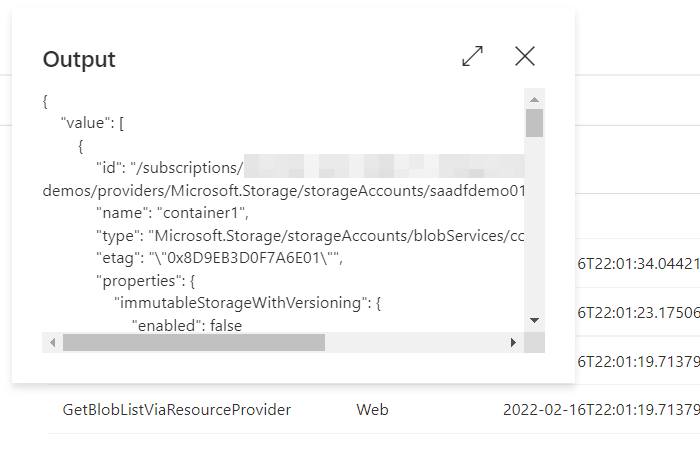

Now back to the result of the Web activity. The API returns a JSON payload, which means you can easily process it in the pipeline:
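To give a feel for the payload shape, here is a small Python sketch that pulls the container names out of a response. The sample document is made up and trimmed; it only assumes that the containers are returned in a top-level value array whose items carry a name property.

```python
import json
from typing import List

# Hypothetical, trimmed response for illustration only.
SAMPLE_RESPONSE = """
{
  "value": [
    {"name": "container1", "type": "Microsoft.Storage/storageAccounts/blobServices/containers"},
    {"name": "container2", "type": "Microsoft.Storage/storageAccounts/blobServices/containers"}
  ]
}
"""


def container_names(payload: str) -> List[str]:
    """Extract the container names from a control-plane list response."""
    document = json.loads(payload)
    return [item["name"] for item in document.get("value", [])]


print(container_names(SAMPLE_RESPONSE))
```

Inside a pipeline, the rough equivalent would be to iterate over the value array of the Web activity's output in a ForEach activity.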

Example 2: Getting all Storage Account containers via the data plane API

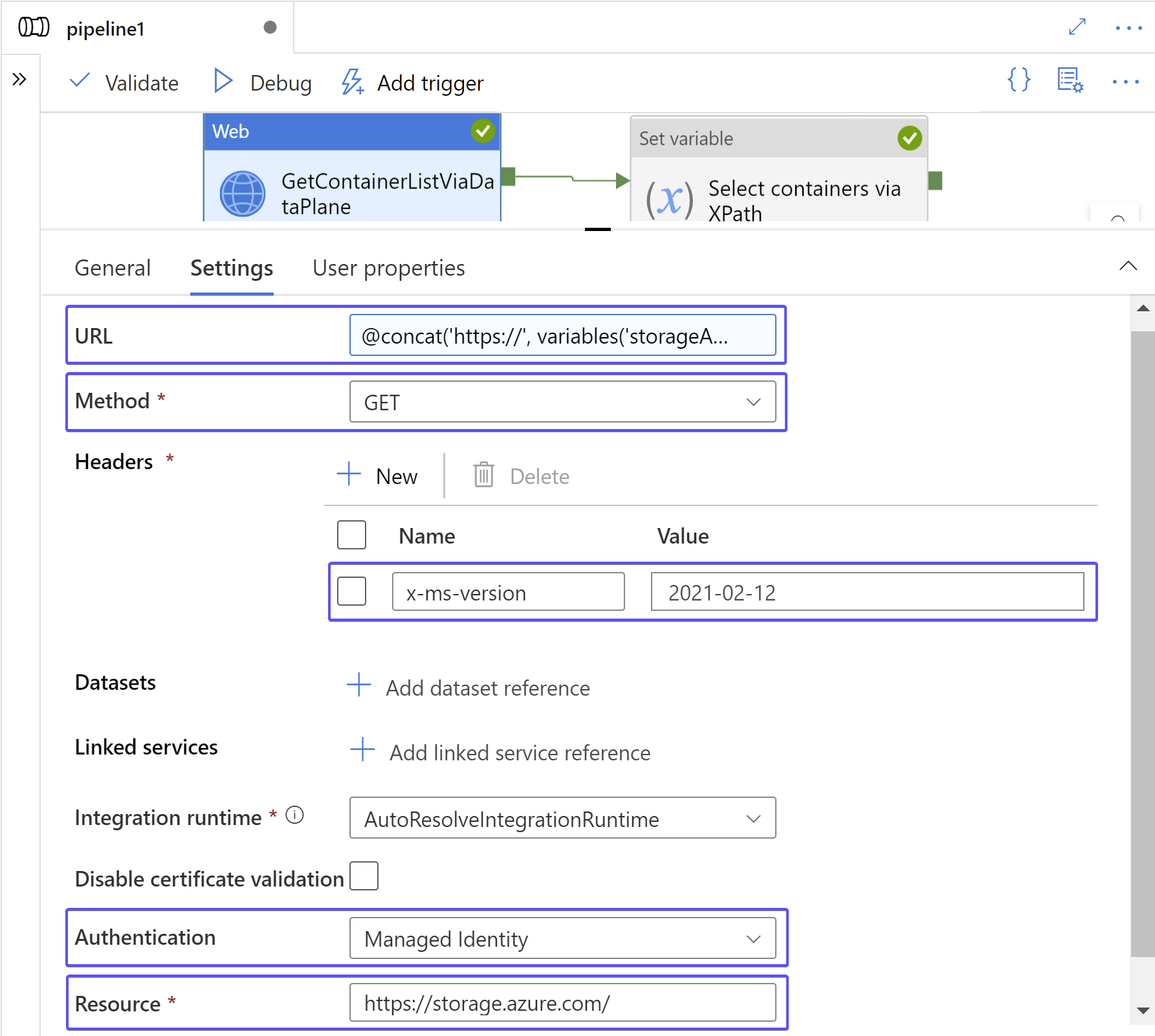

An alternative way of obtaining a list of all containers is to call the Blob Service REST API using the FQDN of your storage account. It's an HTTP GET to https://{accountname}.blob.core.windows.net/?comp=list. The role assignment from Example 1 will suffice. The Web activity is configured as follows:

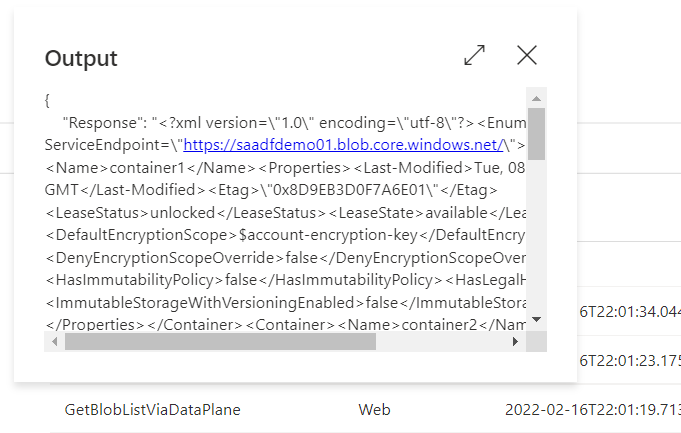
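Written out as plain data, the request might look like the Python sketch below. Two details are assumptions rather than something stated in the text: the x-ms-version header (token-based calls to the Blob service need version 2017-11-09 or later, and the screenshot may use a different value) and the https://storage.azure.com/ token audience. The function name is hypothetical.

```python
from typing import Any, Dict


def build_blob_list_request(account_name: str) -> Dict[str, Any]:
    """Describe the data-plane List Containers request without sending it."""
    return {
        "method": "GET",
        "url": f"https://{account_name}.blob.core.windows.net/?comp=list",
        # Assumption: minimum service version that accepts Azure AD tokens.
        "headers": {"x-ms-version": "2017-11-09"},
        # Assumption: token audience for Azure Storage in Azure Global.
        "resource": "https://storage.azure.com/",
    }


print(build_blob_list_request("mystorageaccount"))
```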

One of the gotchas of the Blob Service REST API is that it works with XML payloads:

At the time of writing it is not possible to negotiate the content type and work with JSON. It is somewhat unpleasant to work with XML in Azure Data Factory but it is nowhere near impossible. You have most likely noticed the Set variable activity that comes after the HTTP request. It initializes a variable of type array with the output of the following XPath query: @xpath(xml(activity('GetBlobListViaDataPlane').output.Response), '//Container/Name/text()'). The result is very compact, namely an array of container names: ["container1", "container2", ...].
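For comparison, the same extraction can be sketched in Python with the standard library. The XML below is a made-up, trimmed stand-in for a List Containers response; the path expression matches the //Container/Name query used above.

```python
import xml.etree.ElementTree as ET
from typing import List

# Hypothetical, trimmed response for illustration only.
SAMPLE_XML = """<EnumerationResults ServiceEndpoint="https://mystorageaccount.blob.core.windows.net/">
  <Containers>
    <Container><Name>container1</Name></Container>
    <Container><Name>container2</Name></Container>
  </Containers>
  <NextMarker />
</EnumerationResults>"""


def container_names_from_xml(xml_text: str) -> List[str]:
    """Return the text of every Container/Name element, in document order."""
    root = ET.fromstring(xml_text)
    return [element.text for element in root.iterfind(".//Container/Name")]


print(container_names_from_xml(SAMPLE_XML))
```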

Example 3: Retrieving a secret from Azure Key Vault

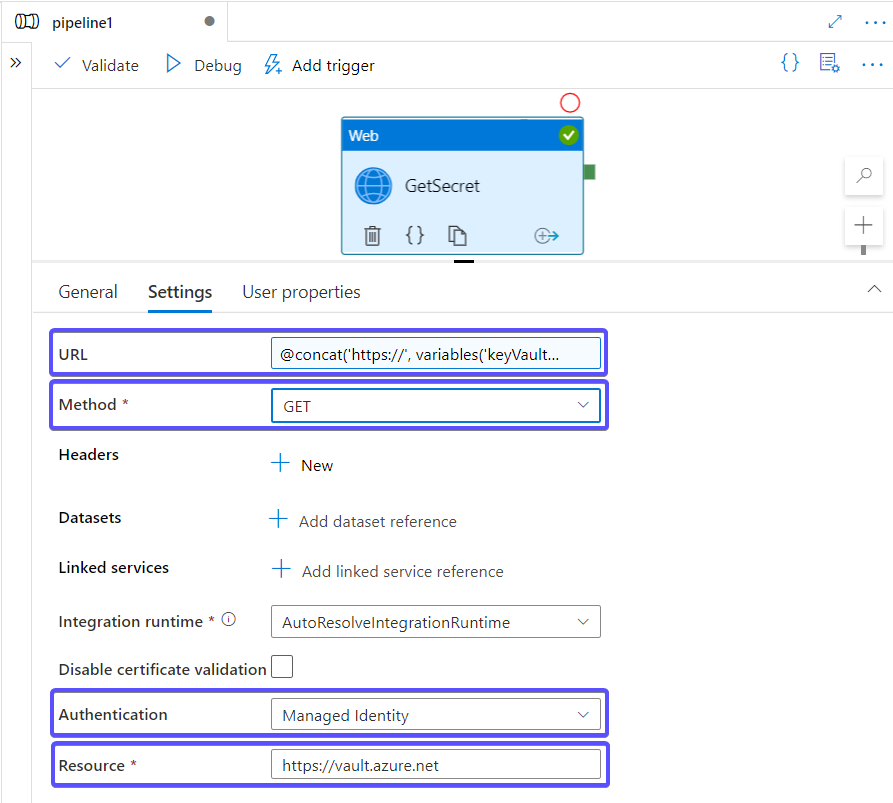

Although Azure Data Factory integrates very well with Key Vault when it comes to configuring Linked Services, for example, it doesn't offer an activity that retrieves a secret for later use in the pipeline. This is where the Get Secret operation comes in. As a Web activity, it looks like this:

The URL from the screenshot above is set to @concat('https://', variables('keyVaultName'), '.vault.azure.net/secrets/', variables('secretName'), '?api-version=7.0').

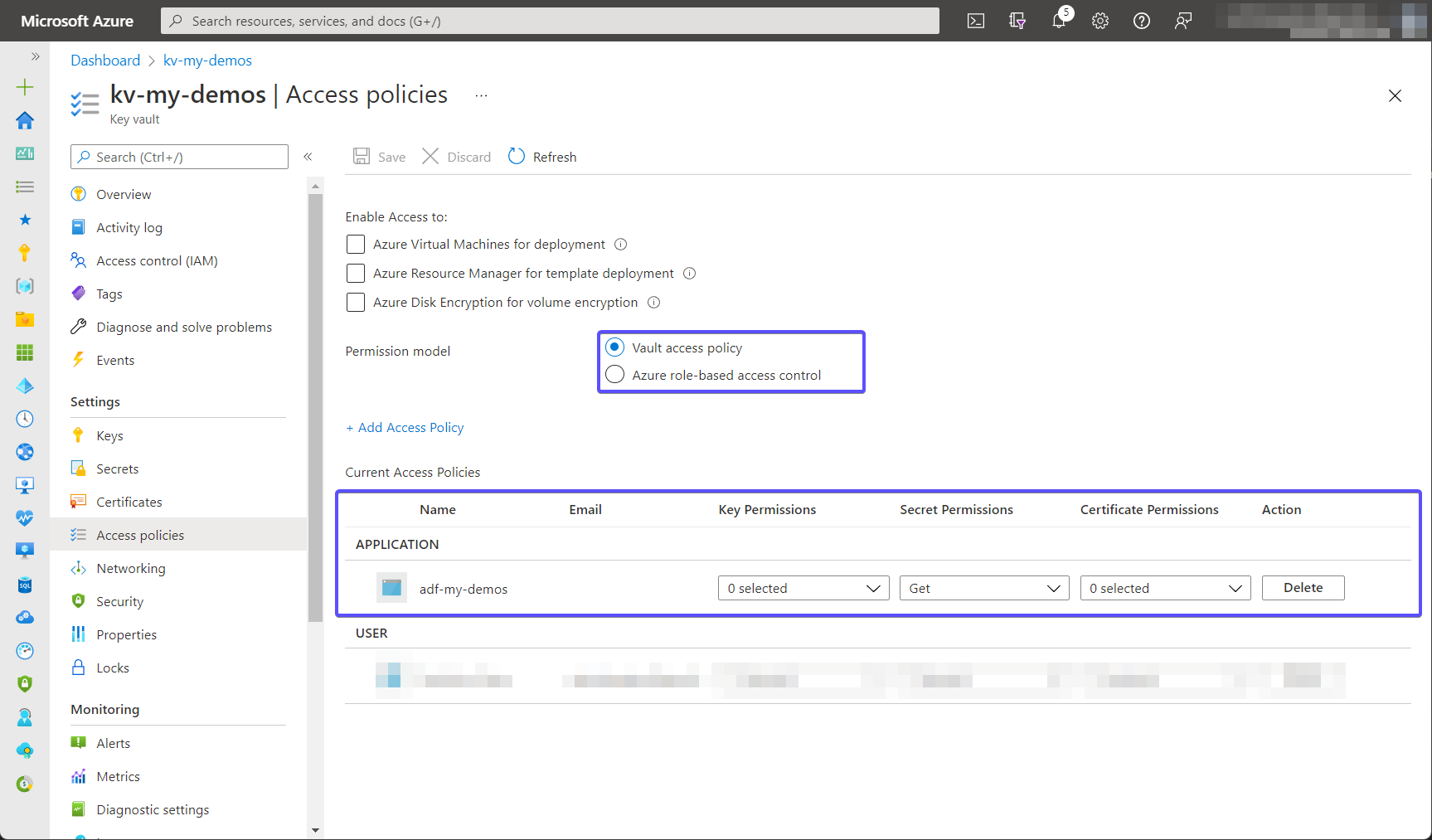

Then, as usual, some access-related configuration will be needed. In case your Key Vault leverages access policies as its permission model, there must be an access policy granting the Managed Identity of the Data Factory permission to read secrets:

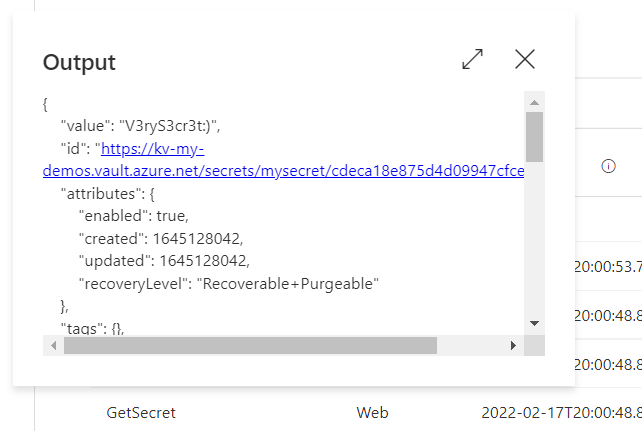

The output of the request looks like this:
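As a rough sketch of how that output can be consumed, the Python snippet below builds the same URL as the expression above and reads the secret from a response. The sample payload is invented (it is not the output of a real request), and it only assumes that the secret is returned in a top-level value property. The function names are hypothetical.

```python
import json


def build_secret_url(key_vault_name: str, secret_name: str, api_version: str = "7.0") -> str:
    """Mirror the @concat(...) expression used for the Get Secret URL."""
    return (
        "https://" + key_vault_name
        + ".vault.azure.net/secrets/" + secret_name
        + "?api-version=" + api_version
    )


# Hypothetical response body for illustration only.
SAMPLE_RESPONSE = """
{
  "value": "not-a-real-secret",
  "id": "https://mykeyvault.vault.azure.net/secrets/mysecret/0123456789abcdef0123456789abcdef",
  "attributes": {"enabled": true}
}
"""


def secret_value(payload: str) -> str:
    """Return the secret carried in the response's value property."""
    return json.loads(payload)["value"]


print(build_secret_url("mykeyvault", "mysecret"))
```

In a pipeline, later activities would read the same property from the Web activity's output.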

REST API Reference

Here are some links that can help you find the API of interest:

Summary

In this post, I've shown how to execute Azure REST API queries right from the pipelines of either Azure Data Factory or Azure Synapse. Although the pipelines are capable of doing this, they shouldn't be used for any large-scale automation efforts that affect many Azure resources. Instead, they should be used to complement your data integration needs.